Withdrawal - Remote Ripples (2020)

My work is now different, in all but name, from the originally planned piece. The original work withdrew itself from the viewer, whereas the title now refers to the withdrawal of the viewer from the artwork. The artwork connects people: in a gallery space, art is viewed and interacted with communally, and I wanted that to be the case for the online work I made. While this could be installed in a gallery, I think the whole point is that it isn’t. If people are in a space with the work, most of the technology becomes redundant, and therefore so does the theory. The piece is about the real-world manifestations of computing and cyberspace, and about the misnomer of the ‘cloud’ as some entity that exists outside the physical world, when in reality its infrastructure is tangible.

The work takes the form of a live stream that can be influenced by clicking on the screen, an action that would normally have no effect on a stream. The stream shows the reflections from a pool of water. The user's clicks trigger small magnetic actuators that splash the water. The reflections are captured rather than the water itself, as this amplifies the effect the actuators have. When the user clicks, an animation begins that fills the delay caused by the latency of the live stream. The animation is a reverse ripple: a circle surrounds the clicked position and shrinks as it moves to the point that will eventually be the origin of the real ripple. This directly connects the click with the eventual ripple. These circles are synced between browsers, so if someone else is also logged on, their circles will appear on your screen and vice versa. In this way, multiple people can experience the artwork together while being distant from one another.
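To make the reverse ripple concrete, here is a minimal sketch of its timing, written in Python for illustration only. It assumes a fixed stream latency of 3 seconds, a starting radius of 120 pixels, and that each click is routed to the nearest of a known set of actuator positions; none of these values, nor the nearest-actuator rule, come from the piece itself. The circle's centre slides linearly from the click to the ripple origin while its radius shrinks to zero over the latency window.

```python
import math
from dataclasses import dataclass

# Hypothetical values: the real latency and sizes are not given in the text.
STREAM_LATENCY_S = 3.0  # assumed stream delay that the animation must fill
START_RADIUS = 120.0    # assumed starting radius of the circle, in pixels


@dataclass
class Point:
    x: float
    y: float


def nearest_actuator(click: Point, actuators: list[Point]) -> Point:
    """Assumed mapping: a click is routed to the closest actuator."""
    return min(actuators, key=lambda a: math.hypot(a.x - click.x, a.y - click.y))


def reverse_ripple(click: Point, origin: Point, t: float,
                   duration: float = STREAM_LATENCY_S,
                   start_radius: float = START_RADIUS) -> tuple[Point, float]:
    """Centre and radius of the shrinking circle t seconds after a click.

    The centre moves linearly from the click to the ripple origin while the
    radius shrinks linearly to zero, so the circle vanishes when the real
    ripple is expected to appear on the delayed stream.
    """
    p = min(max(t / duration, 0.0), 1.0)  # animation progress, clamped to [0, 1]
    centre = Point(click.x + (origin.x - click.x) * p,
                   click.y + (origin.y - click.y) * p)
    return centre, start_radius * (1.0 - p)


# Usage: a click near the second of two (hypothetical) actuator positions.
actuators = [Point(100, 100), Point(400, 300)]
click = Point(380, 260)
origin = nearest_actuator(click, actuators)
for t in (0.0, 1.5, 3.0):
    print(t, reverse_ripple(click, origin, t))
```

Linear motion is just the simplest choice; an easing curve would work equally well, provided the radius still reaches zero at the moment the real ripple is due.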

I was trying less to communicate with the audience and more to connect my audience with each other. Something I find myself searching for in online artwork is a sense of connection to other viewers. Usually, each user becomes a separate session on a server and is closed off from everyone else, even if they are experiencing the work at exactly the same time. I think the magic of my work comes from seeing other people's circles moving around in real time.

Making-Of

My work has changed enormously since it became clear that it would no longer be presented in the gallery as I had expected. My original work would have relied very heavily on real-time sensors detecting people in physical proximity to the piece. The work was meant to imbue a machine with human qualities in order to provoke self-reflection in the viewer. I had found in the past that watching a machine mimic human behaviours can somehow amplify those behaviours. The piece would have been called Withdrawal because the machine would be withdrawing from the human. I was recently sent a piece of work that happened to be along the lines of what I originally wanted to build: Ken Rinaldo's Autopoiesis (2000). The strength of this piece is the physical motion of its arms, guided by a bespoke set of infrared sensors, which could not easily be mirrored in a virtual version. I think the same could have been said of my original piece.

It took about a month, if not longer, for me to come to terms with the fact that I could not continue this project from lockdown, as none of the techniques of interaction I explored could match the physical interaction the piece was founded on. I therefore decided to leave it as a physical idea for a later date and focus on something more manageable at home and compatible with our socially distanced state. It was only later that I realised it might still be worth keeping a physical element in the interaction.

I had begun working on a residency at Arebyte gallery, for which I proposed to use my expertise and equipment for video streaming to link the physical work and discussions taking place in the gallery with the online residency. I had also intended to make a work in the gallery that was somehow controlled from online, to connect Arebyte’s and the residency’s online presence with the real space. However, I had imagined this would be some kind of machine with freedom of movement in the gallery.

I was struck by the idea of streaming, though, as it is something I have a lot of experience with and expertise in. It is also something I intend to work with for a living after I finish my studies. I was reminded of a few pieces I had seen a few years ago in which a collective of players watching a livestream could vote on which button to press next, playing single-player games cooperatively. The most notable of these was probably ‘Twitch Plays Pokemon’ (article here), which took 16 days but was completed successfully.

A more direct approach was used by a YouTuber called Useless Duck Company, who made a talking banana that would read out chat messages (article here). The project grew into a hugely sophisticated censoring algorithm when spammers took over the chat, filling it with expletives and slurs.

More broadly, I have been interested in the interaction between cyberspace and the physical world since reading Tubes by Andrew Blum. In the book, Blum explores the physical infrastructure that makes up the internet by tracing it from the wire that connects to his computer all the way back to internet exchanges and the undersea cables that connect continents.

I also read about the Stuxnet virus (Wired Article) (New York Times Article) and watched the documentary Zero Days (2016) by Alex Gibney. In this case, a virus that can move from system to system was built to infect the computers that monitored Iran’s nuclear centrifuges. The virus was designed to target specific PLCs (Programmable Logic Controllers) which were used to control the operation of the centrifuges, and this control was then used to destroy them. This made it a remarkably covert operation: the machinery was destroyed, effectively from the other side of the world, entirely via pre-existing, open infrastructure.

A favourite art piece of mine, which I think comments on both the dispersed nature of internet networks and their fallibility, is Summer (2013) by Olia Lialina. It is a GIF animation whose frames are dispersed among servers around the world, each redirecting to the next to form a continuous loop. Normally this loop is unbroken; however, at the time of writing, one frame is inaccessible, so the chain is broken and no longer self-sustaining.

I was able to begin work properly when lockdown loosened and I could travel back to London and collect a good deal of equipment from my flat, including prototype solenoid driver boards I had made for an earlier project. Once I had this kit, I could start iterating on the setup I would use for the piece. My technical progress on this project took place in two streams. One was building a system for communicating between browser windows over the internet and relaying those messages to an ESP8266, an Arduino-like board. The other was building the physical infrastructure to create a real-life effect and capture it for the online platform.

Software

I ported over some code from a project I made at the start of lockdown, an online card game. This game worked over websockets using a node.js server, so it was easy for an ESP8266 to also join the server and receive the messages. This worked fine as a local test, including with a twitch.tv stream embedded as the background. The problems began when I set it up to be accessible remotely. I used an Amazon Web Services instance, set up for a previous project with node.js installed, to run the server. This worked well for a couple of early tests, but then something changed in how Twitch allows embedding: the embed, which had been working well, suddenly started throwing errors. I found that Twitch no longer allows embedding on insecure (non-HTTPS) sites.
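The fan-out at the heart of that server can be sketched without any networking. The Python below is an in-memory stand-in, not the node.js code from the project: each connected client (a browser or the ESP) gets its own queue, and a message from one client is copied to every other client. The JSON message shape (`type`, `x`, `y`) and the choice not to echo a click back to its sender, which has already started its own animation, are assumptions.

```python
import asyncio
import json


class BroadcastHub:
    """In-memory stand-in for a websocket server's broadcast logic.

    Every connected client (browser or ESP) has its own queue; a message from
    one client is copied to all the others.
    """

    def __init__(self) -> None:
        self.clients: dict[str, asyncio.Queue] = {}

    def connect(self, client_id: str) -> asyncio.Queue:
        queue: asyncio.Queue = asyncio.Queue()
        self.clients[client_id] = queue
        return queue

    def disconnect(self, client_id: str) -> None:
        self.clients.pop(client_id, None)

    def broadcast(self, sender_id: str, message: dict) -> None:
        payload = json.dumps({"from": sender_id, **message})
        for client_id, queue in self.clients.items():
            if client_id != sender_id:  # assumed: sender already drew its own circle
                queue.put_nowait(payload)


async def demo() -> None:
    hub = BroadcastHub()
    hub.connect("alice")
    bob = hub.connect("bob")
    esp = hub.connect("esp")
    # Hypothetical message shape: click position as a fraction of the frame.
    hub.broadcast("alice", {"type": "click", "x": 0.25, "y": 0.5})
    print("bob got:", await bob.get())
    print("esp got:", await esp.get())


asyncio.run(demo())
```

Sending positions as fractions of the frame (again an assumption) would let a circle land in the same place on screens of different sizes.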

I therefore had two options: switch from Twitch to another streaming platform, or rewrite the site to be secure. I judged that securing the site would be the bigger undertaking, so I started searching for comparable streaming platforms. I was hesitant to leave Twitch, as it works as a single persistent channel, so there is no need to update the site every time a new stream begins. It also had the lowest latency of the options I had tested, and I was determined to keep latency low to help the fluidity of the interaction. I tried YouTube, but my live streams kept being taken down as soon as I embedded them, so I assumed it wasn’t a usable option. I found another AWS package that allowed low-latency streaming over WebRTC, but it downsampled my streams so the quality was poor, and it was also not free, so I abandoned it and was back to facing the task of securing the website.

I decided to move away from AWS, as they blocked access to the standard ports for HTTPS and similar services on my instance. I got a small instance with A2 Hosting and followed this guide to set up node.js, after unsuccessfully trying to do the same thing through their cPanel system. This worked and let me keep developing, now that I knew the site and the embedding worked. However, it posed a problem for connecting the ESP, as it seems the ESP cannot handle a secure websocket connection. I therefore wrote a relay node.js server that connects to both the ESP and the remote server and bridges the gap, receiving the secure messages from the main server and relaying them over an insecure connection to the ESP. I’m sure there is a more elegant solution, but I didn’t have time to find it.
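A relay like this is mostly a forwarding loop. The sketch below shows that loop in Python with both connections abstracted away: `upstream` stands in for the secure connection to the main server and `send_to_esp` for the insecure local connection to the ESP. It is a hypothetical illustration rather than the project's node.js relay; forwarding only messages of type `click` and silently dropping malformed ones are assumptions, made so that junk arriving at the public server never reaches the hardware.

```python
import asyncio
import json
from collections.abc import AsyncIterator, Awaitable, Callable


async def relay(upstream: AsyncIterator[str],
                send_to_esp: Callable[[str], Awaitable[None]]) -> int:
    """Forward click messages from the secure server to the ESP.

    `upstream` stands in for the secure connection to the main server and
    `send_to_esp` for the plain local connection to the ESP. Returns how many
    messages were forwarded.
    """
    forwarded = 0
    async for raw in upstream:
        try:
            message = json.loads(raw)
        except json.JSONDecodeError:
            continue  # drop anything that is not JSON
        if isinstance(message, dict) and message.get("type") == "click":
            await send_to_esp(raw)  # pass the original text through unchanged
            forwarded += 1
    return forwarded


async def demo() -> None:
    async def fake_upstream():
        for raw in ['{"type": "click", "x": 0.1, "y": 0.2}', "garbage", '{"type": "chat"}']:
            yield raw

    async def fake_esp(raw: str) -> None:
        print("to ESP:", raw)

    print("forwarded", await relay(fake_upstream(), fake_esp))


asyncio.run(demo())
```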

You might think that was it… But a few days later I came back to do another test and it was broken again. This time no websockets would connect at all. The architecture is such that the browser requests the page from the remote server, which serves it; the web page then connects back to the server and establishes a websocket connection. This step kept failing no matter what I did, despite my not having changed anything. The whole point of hosting the project remotely had been to avoid hosting the site myself and messing with my parents' network, which is important for their work. If this piece were in a gallery or a formal setting, it would have its own internet connection, and traffic would simply be port-forwarded to a local server.
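The "connects back" step has one detail that ties it to the earlier HTTPS problem: a page served over `https://` has to open its socket with `wss://`, because browsers block an insecure `ws://` connection from a secure page as mixed content. Here is a small Python sketch of deriving the socket address from the page's own URL; the function name and the default path are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def websocket_url(page_url: str, path: str = "/") -> str:
    """Websocket address on the same host the page was served from.

    https:// pages must use wss:// (browsers block ws:// from a secure page
    as mixed content); plain http:// pages can use ws://.
    """
    parts = urlsplit(page_url)
    scheme = {"https": "wss", "http": "ws"}.get(parts.scheme)
    if scheme is None:
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")
    return urlunsplit((scheme, parts.netloc, path, "", ""))


print(websocket_url("https://example.com/index.html"))
print(websocket_url("http://localhost:8080/", "/socket"))
```

In the browser, the same decision is usually made from `location.protocol` and `location.host`.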

At the last minute, I happened to be watching Joe McAlister’s Raspberry Pi tutorial, which included a section on NGROK, and it turned out to be my saviour. NGROK is a service that tunnels a public hostname to a particular port on the local machine, so it let me forward ports without changing anything on the local network. I wrote a config and a script that launch two NGROK tunnels: one hostname becomes the access URL and the other becomes the websocket address. This would be a single hostname if I paid for NGROK, but I had spent enough on this project by this point.
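With two tunnels, the page can no longer assume the websocket lives on its own host, and at least at the time, free NGROK tunnels were given a new random hostname on each launch. One way a launch script could discover the current hostnames is the NGROK agent's local API, which lists the open tunnels at `http://127.0.0.1:4040/api/tunnels`. The Python sketch below assumes the tunnels are named `site` and `socket` in the config (hypothetical names), uses only the `name` and `public_url` fields of the response, and keeps only the HTTPS URLs.

```python
import json
from urllib.request import urlopen

# NGROK agent's local API (default address); lists the currently open tunnels.
NGROK_API = "http://127.0.0.1:4040/api/tunnels"


def public_urls(api_response: str) -> dict[str, str]:
    """Map tunnel name -> public HTTPS URL from an /api/tunnels response."""
    tunnels = json.loads(api_response)["tunnels"]
    return {t["name"]: t["public_url"] for t in tunnels
            if t["public_url"].startswith("https://")}


def websocket_address(urls: dict[str, str], tunnel: str = "socket") -> str:
    """Turn the websocket tunnel's https:// URL into the wss:// address the page needs."""
    return "wss://" + urls[tunnel][len("https://"):]


def fetch_addresses() -> tuple[str, str]:
    """(page URL, websocket URL); needs NGROK running with both tunnels open."""
    with urlopen(NGROK_API) as response:
        urls = public_urls(response.read().decode("utf-8"))
    return urls["site"], websocket_address(urls)
```

The websocket address could then be written into, or served to, the page before anyone connects.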

The various windows displaying the status of the three servers that need to be running for everything to work.

Physical Setup / Hardware

I had two ideas for the physical manifestation of this piece: I had been thinking about water ripples, but also about flames. I couldn’t decide which to make, so I started building both, planning to decide down the line which to continue with, as much of the code is identical. I already had most of the electronics ready from a previous project, so it was simple to hook up both the gas valves and the linear actuators to the ESP8266. I started with the flame project, as it required less finessing and fewer actuators.

Elia (2000) - Ingvar Cronhammar

I was inspired to create a piece in flame after reading about Elia, a piece by Ingvar Cronhammar that produces an 8m high flame, triggered at random by a computer about once every few weeks. Very little video or photography of it erupting exists, but a record of past eruptions is kept on the web. Elia is metallic and industrial in its aesthetic; it seems futuristic, like a UFO, and it has a certain mystique.

See the flame project in this blog post.

This project got off to a good start when I nearly set fire to myself on the first test of the gas regulator I had bought to adapt the canister to the rest of the system. The biggest hurdle I faced was that the pipe coming from the gas canister was a different size to the thread on the valve. Normally, with a problem like this, I would take the pipes in question to a hardware shop and try different fittings; however, all the shops were closed, so this was not an option. I ordered several fittings online and 3D printed a wide selection, but none provided a good seal. I eventually used a piece of flexible pipe and hose clamps to hold the pipe over the ends of the different fittings.

Once I had this going, I wrote a test program for the ESP that toggled the valves individually to see if there was enough pressure. I was pleased that the fire did indeed work and the valves controlled the flames. The flames were rather small, though, and I decided this idea was too weather-dependent, as it could only run outdoors and on good days. It also doesn’t strike me as something a gallery would want in their space, given the obvious safety implications. I fully intend to return to this project later, but I decided to focus on the water version of the idea for the time being.
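The valve test amounts to opening one channel at a time. The firmware itself would run on the ESP as Arduino code; the Python sketch below only illustrates the sequencing logic, with the pin write and the delay passed in as functions so it can be dry-run on a computer. The pin numbers and the one-second on-time are hypothetical.

```python
import time
from collections.abc import Callable

# Hypothetical ESP8266 GPIO numbers for the valve driver channels.
VALVE_PINS = [5, 4, 14, 12]


def toggle_each(pins: list[int],
                set_pin: Callable[[int, bool], None],
                on_time: float = 1.0,
                sleep: Callable[[float], None] = time.sleep) -> None:
    """Open each valve on its own for `on_time` seconds, then close it.

    Only one channel is open at a time, so each valve's flame (and the gas
    pressure available to it) can be judged separately.
    """
    for pin in pins:
        set_pin(pin, True)   # open valve
        sleep(on_time)
        set_pin(pin, False)  # close valve before moving on


# Dry run: print the writes instead of driving real pins, and skip the delays.
toggle_each(VALVE_PINS, lambda pin, on: print(pin, "open" if on else "closed"),
            sleep=lambda seconds: None)
```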

I started with some very basic experiments to make sure that the actuators I wanted to use were waterproof, and luckily they were. I then experimented with which parts of the actuators needed to be in the water. I had intended to have the ‘splashers’ float on the water; however, a couple of tests quickly showed me that floating objects won’t stay in one place, and also that 3D prints are not buoyant or watertight. I therefore decided to sink them in the water instead.

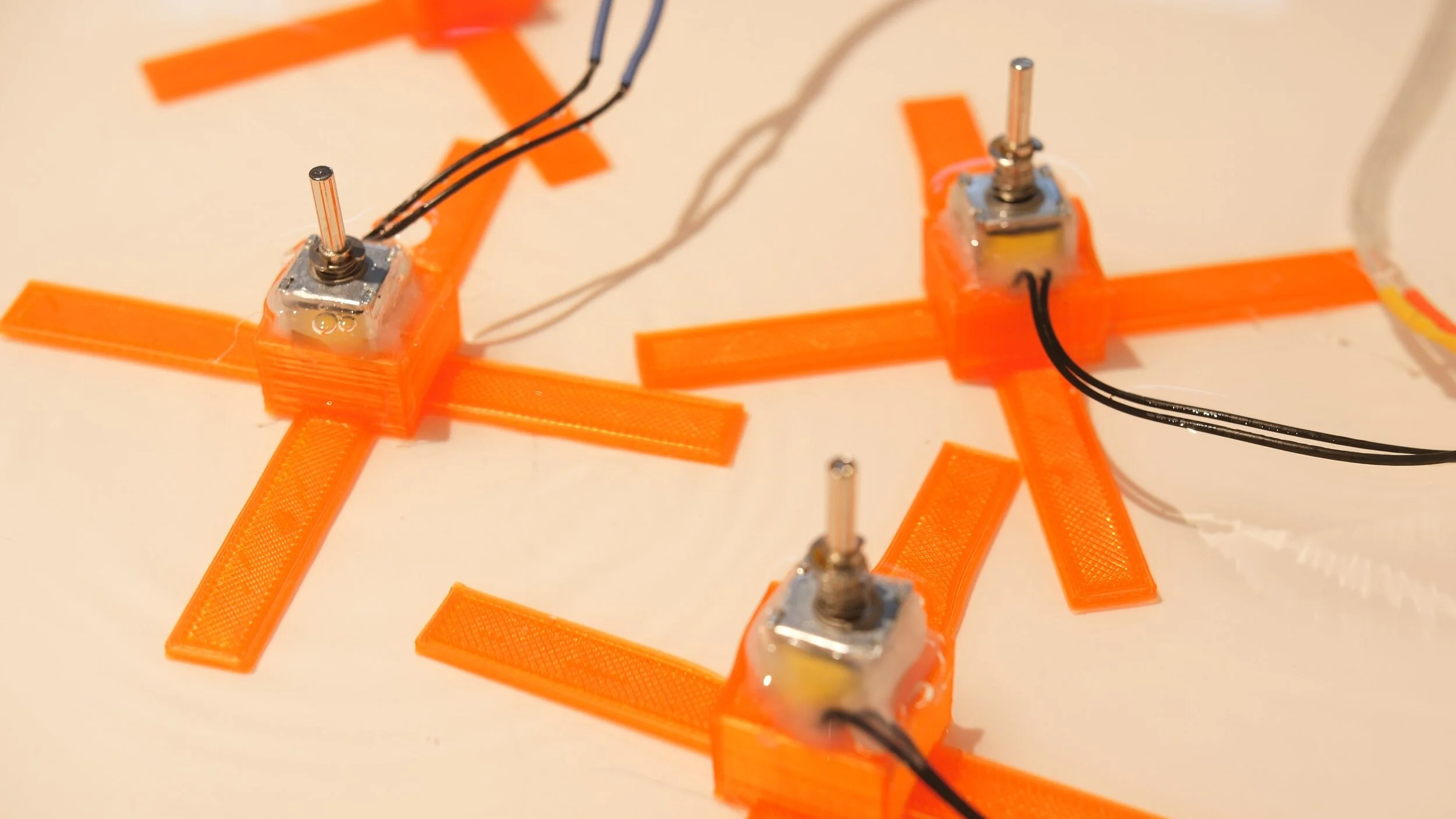

At this point I began testing what sort of light source I needed to project a reasonable image of the water onto my ceiling. I began with my phone torch, which proved remarkably successful, but it was very dim and difficult to capture on camera. From this I knew I needed a point light source, or one that I could focus to some degree. I therefore found a local company that rents theatre lights and hired a very standard spotlight, an ETC Source Four. Once I had this set up, I discovered that the water needed very little agitation to create a big ripple in the projection. I therefore moved away from having the actuators in direct contact with the water and made large feet to hold them in place and in a fixed orientation. The vibrations travelling through the mount into the water alone were enough to make a serious ripple.

The rest was fairly straightforward. I set up a camera pointing at the ceiling and fed it into Open Broadcaster Software (OBS) using a capture card. OBS then filtered the video to increase the contrast before sending out the live stream, which was embedded behind the animations.
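OBS's own filters (such as its Color Correction filter) did the contrast work here, and the sketch below is not a claim about their internal maths. As a generic illustration of what a contrast boost does to the image, this Python function applies a linear contrast stretch to 8-bit values: values are pushed away from mid-grey by `factor` and clamped to 0-255, which brightens the bright lines of the reflections and darkens the ceiling between them. The factor used is an assumption.

```python
def adjust_contrast(value: int, factor: float, midpoint: float = 127.5) -> int:
    """Linear contrast stretch for one 8-bit channel value.

    factor > 1 pushes values away from mid-grey (more contrast), factor < 1
    pulls them towards it; the result is clamped to the 0-255 range.
    """
    stretched = (value - midpoint) * factor + midpoint
    return max(0, min(255, round(stretched)))


def adjust_row(row: list[int], factor: float) -> list[int]:
    """Apply the same stretch to every value in a row of pixels."""
    return [adjust_contrast(v, factor) for v in row]


# A dim ceiling pixel, a mid pixel, and a bright reflection, with an assumed factor.
print(adjust_row([60, 150, 200], 1.5))
```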

Link to GitHub: https://github.com/RobHallArt/Final-Project-Web-Node-ESP-Communication